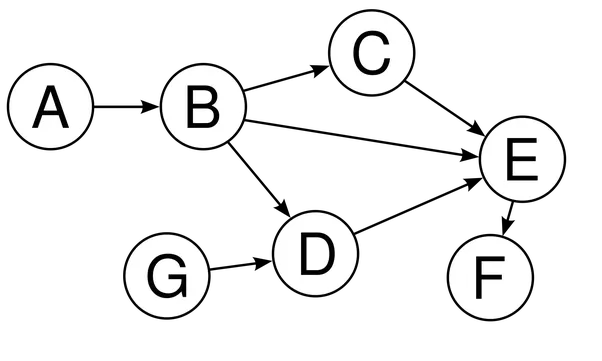

The basic concept behind the Hkube pipeline is the DAG.

A Directed Acyclic Graph (DAG) is a graph of nodes connected by edges that have a direction and never loop back on themselves: A -> B -> C.
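To make the definition concrete, here is a minimal Python sketch of the A -> B -> C chain. It is an illustration only and not Hkube code: the `dependencies` mapping and both helper functions are hypothetical names, and the ordering comes from the standard-library `graphlib` module (Python 3.9+).

```python
from graphlib import CycleError, TopologicalSorter

# Each key maps a node to the set of nodes it depends on (its predecessors).
# This encodes the chain A -> B -> C.
dependencies = {"A": set(), "B": {"A"}, "C": {"B"}}


def execution_order(deps):
    """Return one valid order in which the nodes can run."""
    return list(TopologicalSorter(deps).static_order())


def is_acyclic(deps):
    """Return True if the graph has no cycle, i.e. it is a valid DAG."""
    try:
        execution_order(deps)
        return True
    except CycleError:
        return False


order = execution_order(dependencies)
print(order)
```

A graph in which C also points back to A would make `is_acyclic` return `False`, because no node could run first.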

The DAG structure makes it possible to:

Hkube stores and executes pipelines through a RESTful API. Hkube supports three types of pipeline execution: Raw, Stored, and Node.

When you run a Raw pipeline, you create a new execution each time. This means you must define all the pipeline details every time you run it.

/exec/raw - full details

{ "name": "simple", "nodes": [ { "nodeName": "green", "algorithmName": "green-alg", "input": [ true, "@flowInput.urls", 256 ] } ], "flowInput": { "files": { "link": "links-1" } }, "options": { "batchTolerance": 100, "progressVerbosityLevel": "debug", "ttl": 3600 }, "webhooks": { "progress": "http://localhost:3003/webhook/progress", "result": "http://localhost:3003/webhook/result" }, "priority": 3 }
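As a rough sketch of how such a body could be submitted from a client, the Python snippet below builds a POST request for `/exec/raw` using only the standard library. The base URL and the `build_raw_request` helper are assumptions made for illustration; substitute the address of your own Hkube API server. The payload is a shortened copy of the example above.

```python
import json
import urllib.request

# Assumption: placeholder address, not a documented Hkube default.
BASE_URL = "http://localhost:8080"


def build_raw_request(base_url, pipeline):
    """Build (but do not send) a POST request carrying the full pipeline."""
    body = json.dumps(pipeline).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/exec/raw",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# A raw execution carries the whole definition: nodes, input, and options.
raw_pipeline = {
    "name": "simple",
    "nodes": [
        {
            "nodeName": "green",
            "algorithmName": "green-alg",
            "input": [True, "@flowInput.urls", 256],
        }
    ],
    "flowInput": {"files": {"link": "links-1"}},
    "priority": 3,
}

request = build_raw_request(BASE_URL, raw_pipeline)
# To send it against a running server: urllib.request.urlopen(request)
```

Building the request separately from sending it keeps the example runnable without a live server and makes the payload easy to inspect first.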

With a Stored pipeline, you don't need to define all the pipeline details on every run.

You store the pipeline once and then run it with the same or a different input.

/exec/stored - same flowInput

{ "name": "simple" }

/exec/stored - different flowInput

{ "name": "simple", "flowInput": { "urls": ["google.com"] } }

/exec/stored - another flowInput

{ "name": "simple", "flowInput": { "urls": ["facebook.com"] } }
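The three request bodies above differ only in the optional `flowInput` field. A small Python sketch of that pattern follows; the `stored_payload` helper is a hypothetical name used for illustration and is not part of Hkube.

```python
import json


def stored_payload(name, flow_input=None):
    """Build the body for /exec/stored; flowInput is added only when given."""
    payload = {"name": name}
    if flow_input is not None:
        payload["flowInput"] = flow_input
    return payload


payloads = [
    stored_payload("simple"),                               # same flowInput as stored
    stored_payload("simple", {"urls": ["google.com"]}),     # different flowInput
    stored_payload("simple", {"urls": ["facebook.com"]}),   # another flowInput
]

for payload in payloads:
    print(json.dumps(payload))
```

Only the pipeline name is required here, because the nodes and options were defined once when the pipeline was stored.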